🎸 Alpha Users = Rockstars, 💰 NYT x Amazon Licensing, 🌑 AI for Blackouts

Volume 27

Silicon Society is building the future of learning through AI-native job shadowing at scale.

Want to be an early SiSo platform user? Join our Beta waitlist!

Need something built by our agency team? Grab a time to chat.

We’re building the future of learning, out loud.

➡️ We are so grateful to our earliest users who have been casting and viewing shadows at lightning speed and giving us incredible feedback. We’re sprinting towards our beta feature set and slowly letting in users off our waitlist.

Want access for your team? Grab a time to discuss.

➡️ It’s become very clear very quickly that our AI-powered learning platform has wide applications outside of engineering. Nice. Over the past week alone, a diverse array of shadows has been added to the library, including:

Learning Python: Trying out Django

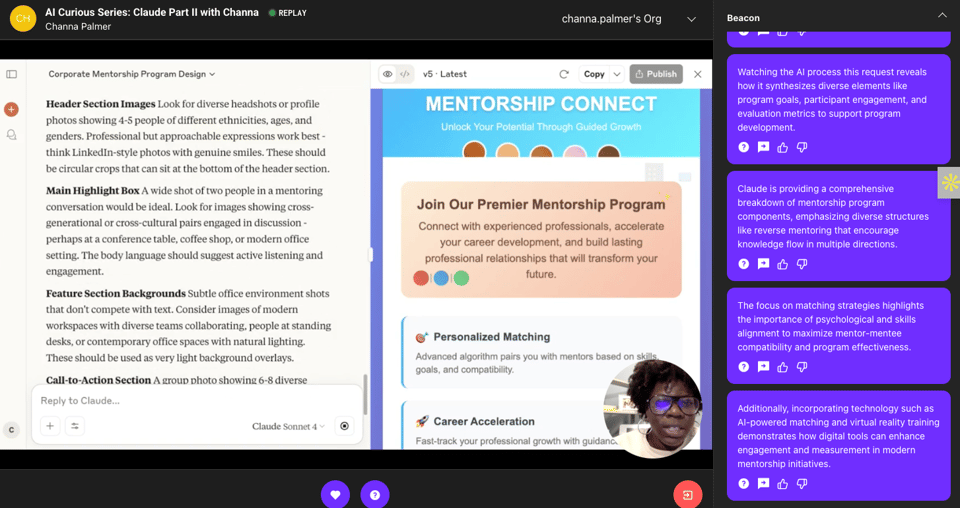

AI Curious Series: Claude Part II

Building an AI Agent

Job Search Mini Series: Prentus & Teal

Ask a Career Coach

And more!

Curated media from the past few weeks:

New York Times Partners with Amazon for First AI Licensing Deal

Given the NYT’s gnarly lawsuit against OpenAI, this deal is noteworthy for its terms and for the NYT’s willingness to experiment with an AI distribution system. Plus, Amazon gets more data for model training. Shall we say a win-win?

🗯️Quotable Bit: “The deal comes as AI companies strive to overcome difficulties in improving their large-language models after exhausting all the easily accessible data in the world. Many, including ChatGPT-owner OpenAI, are also facing lawsuits related to data usage.”

We love a good AI-for-societal-good think piece, and this one, on research into using AI to reduce power outages globally, does not disappoint. Read about how the UK’s Advanced Research and Invention Agency is leading the charge.

🗯️Quotable Bit: “At the heart of Safeguarded AI is the goal of mathematically representing what “safe” looks like in each specific setting, and then enforcing that requirement upon AI to safeguard it for that setting. To develop these mathematical safety proofs, we work with domain experts supported by AI to translate real-world safety requirements — like 'never let voltage drop below X level' or 'always maintain Y minutes of backup power' — into precise mathematical equations.”

Do AI systems have moral status?

Does AI seem close to personhood right now? Nope. But are there signs that we can get close? Yup. This level-headed analysis by the Brookings Institution lays out the open questions, what we should be monitoring, and frankly, why this shouldn’t be the top issue we research today.

🗯️Quotable Bit: “Ultimately, our AI models might belong on the continuum of personhood, somewhere above animals but below human status. What that means for ethics, law, and policy is a challenge we will have to face at some point if AI models continue to develop.”